Understanding the cost implications of implementing Microsoft Fabric is crucial for organisations transitioning to this unified analytics platform. This guide explores the fundamentals of Fabric’s billing model, helping you make informed decisions about resource allocation and cost management. Microsoft Fabric costs are, apparently, a difficult topic, so let’s make them a bit more understandable!

The Basics of Microsoft Fabric Costs

Understanding Capacity Units (CUs) as the fundamental billing metric

Capacity Units are the fundamental piece of Microsoft Fabric’s billing system. They are an abstraction of compute power in the Fabric capacity. Think of Capacity Units as CPU cores (it’s not the same, but it gives you an idea of how to approach this abstract topic).

Microsoft Fabric billing follows the CU model. You purchase ‘capacities’ that give you a certain number of Capacity Units. The pricing model is basically a list of SKUs that run from F2 all the way to F2048, each one doubling the previous, and the number in the SKU name is its number of Capacity Units. So the scale is F2, F4, F8, …, F512, F1024, F2048.
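As a quick illustration, here is a minimal Python sketch that generates the SKU ladder described above and maps each SKU name to its number of Capacity Units. The helper name `fabric_skus` is hypothetical; it only encodes the doubling pattern from F2 to F2048.

```python
def fabric_skus() -> dict[str, int]:
    """Return the F-SKU ladder (F2 ... F2048) mapped to its Capacity Units.

    Each SKU doubles the previous one, and the number in the SKU name
    is the number of Capacity Units the capacity provides.
    """
    return {f"F{2 ** i}": 2 ** i for i in range(1, 12)}


skus = fabric_skus()
print(list(skus))  # the 11 SKU names, from F2 up to F2048
```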

Capacity Unit Seconds vs Capacity Units, what’s what?

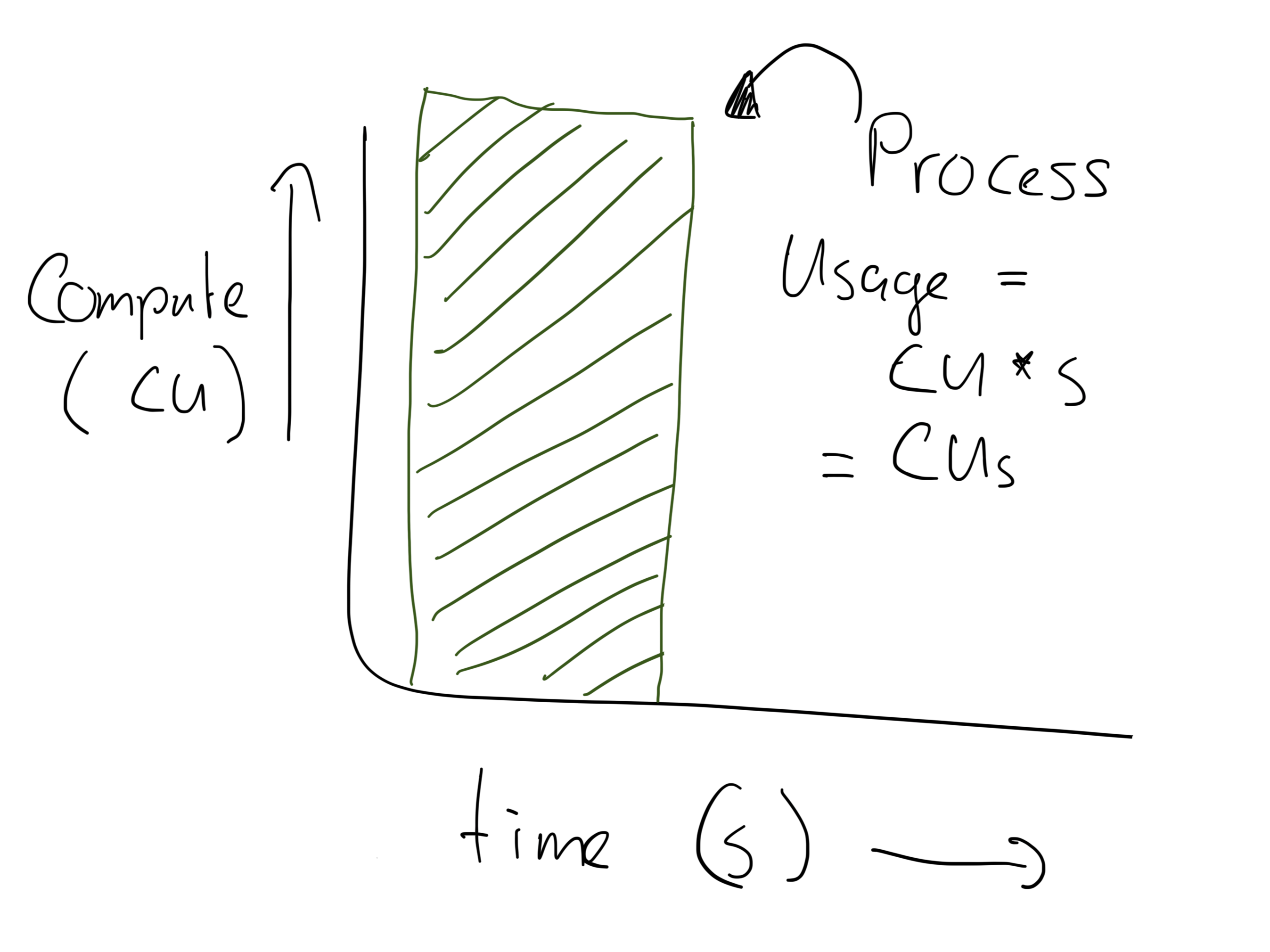

Microsoft Fabric measures compute power over time in Capacity Unit seconds, abbreviated as CU(s). This is not the plural of CU. If Microsoft had asked me, I would have called this CUsec, but they didn’t, so here we are. Anyway, every time you see CU(s), think: Capacity Units * seconds.

That means that an F2 capacity, with 2 Capacity Units, gives you 2 CU(s) per second, or 172,800 CU(s) per day (86,400 seconds in a day, times 2 Capacity Units). An F64 capacity gives you 5,529,600 CU(s) of compute resources per day, and so on.
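The daily allowance is simply the number of Capacity Units multiplied by the seconds in a day. A minimal sketch of that arithmetic (the function name `daily_allowance_cus` is hypothetical):

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def daily_allowance_cus(capacity_units: int) -> int:
    """CU(s) a capacity provides per day: Capacity Units times seconds in a day."""
    return capacity_units * SECONDS_PER_DAY


for sku, cu in [("F2", 2), ("F64", 64)]:
    print(f"{sku}: {daily_allowance_cus(cu):,} CU(s) per day")
# F2: 172,800 CU(s) per day
# F64: 5,529,600 CU(s) per day
```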

Every little bit of work that happens on your Fabric capacity consumes compute power: a number of Capacity Units are busy for a number of seconds, resulting in a number of CU(s) consumed.

An example would be a query that you run in a PySpark notebook that consumes 50 Capacity Units for a total of 10 minutes. In that case, you would have consumed 50 CU * 10 min * 60 sec/min = 30,000 Capacity Unit seconds (CU(s)) for that query.
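The same calculation as a tiny Python helper, so you can plug in your own numbers (the name `consumed_cus` is hypothetical):

```python
def consumed_cus(capacity_units: float, minutes: float) -> float:
    """CU(s) consumed by an operation using `capacity_units` for `minutes` minutes."""
    return capacity_units * minutes * 60  # 60 seconds per minute


print(f"{consumed_cus(50, 10):,.0f} CU(s)")  # 30,000 CU(s)
```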

Mind you, even though your capacity comes with 2 Capacity Units for an F2, or whatever the F-number is, your queries can use way more Capacity Units for a short amount of time. At the end of the day, Microsoft Fabric bills your CU(s) usage against your total available CU(s). You can read more on this topic in the section on bursting and smoothing later in this post.

How Fabric’s consumption-based model differs from traditional licensing

Traditional cloud data warehousing follows a more direct model in which you allocate resources, pay for them, and can use them. A classic example is Azure SQL Database, which I have used extensively in the past to build data platforms.

Let’s assume we have deployed our SQL Database in the DTU model; then we simply get a reserved amount of compute. If we allocate 100 DTUs of compute power, a query might use 100% of the available compute resources. If we then scaled up to 200 DTUs, the query might consume 80% (= 160 DTUs) and therefore run faster.

In a traditional billing model like that, you allocate fixed resources and consume them. If you don’t allocate enough resources for a workload, the workload is capped at what you allocated, causing performance to degrade.

Microsoft Fabric costs work a bit differently, in the sense that you purchase an amount of compute over time (CU(s)), not a fixed ceiling of compute power. As I mentioned in the previous section, even if you’re on an F2 capacity, your workload can consume 10 Capacity Units for a short amount of time. You simply use up your available CU(s) (Capacity Unit seconds, remember!) a bit faster.

Pay-as-you-go Microsoft Fabric Costs

In Microsoft Fabric, your costs depend on the capacity you choose. Microsoft prices Capacity Units by the hour, with rates varying based on your Azure data center and currency.

Let’s assume I’m in the West Europe data center and I pay in euros (EUR). At the time of writing, the Microsoft list price for this data center is 0.2115 EUR per Capacity Unit per hour. An F2 therefore costs 0.423 EUR/hour, an F64 costs 13.536 EUR/hour, etc.
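To estimate your own bill, multiply the Capacity Units by the hourly rate. The sketch below uses the example West Europe list price from the text (not a live price) and assumes 730 hours in an average month (365 days * 24 hours / 12 months) for a capacity that is never paused. The function names are hypothetical.

```python
HOURS_PER_MONTH = 730  # assumption: 365 days * 24 hours / 12 months

# Example West Europe list price from the text; check current pricing for your region.
PRICE_EUR_PER_CU_HOUR = 0.2115


def hourly_cost_eur(capacity_units: int, price: float = PRICE_EUR_PER_CU_HOUR) -> float:
    """Pay-as-you-go cost per hour for a capacity with `capacity_units` CUs."""
    return capacity_units * price


def monthly_cost_eur(capacity_units: int, price: float = PRICE_EUR_PER_CU_HOUR) -> float:
    """Cost of running the capacity 24/7 for an average month, never paused."""
    return hourly_cost_eur(capacity_units, price) * HOURS_PER_MONTH


for sku, cu in [("F2", 2), ("F64", 64)]:
    print(f"{sku}: {hourly_cost_eur(cu):.3f} EUR/hour, ~{monthly_cost_eur(cu):,.2f} EUR/month")
```

This reproduces the 0.423 and 13.536 EUR/hour figures above.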

Microsoft Fabric charges per second, with a minimum of one minute, applying the highest capacity you used within that time, whether you paused, resumed, or scaled your capacity up or down.
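Here is a minimal sketch of per-second billing with a one-minute minimum, assuming the capacity stays on a single SKU for the whole run (it does not model the highest-SKU rule when you scale within that time). The price is the example rate from above, and `run_cost_eur` is a hypothetical name.

```python
PRICE_EUR_PER_CU_HOUR = 0.2115  # example list price from the text
MIN_BILLED_SECONDS = 60  # per-second billing with a one-minute minimum


def run_cost_eur(capacity_units: int, seconds: float, price: float = PRICE_EUR_PER_CU_HOUR) -> float:
    """Cost of keeping a capacity running for `seconds`, billed per second with a 1-minute minimum."""
    billed_seconds = max(seconds, MIN_BILLED_SECONDS)
    return capacity_units * price * billed_seconds / 3600  # price is per CU per hour


print(f"{run_cost_eur(2, 30):.5f} EUR")    # a 30-second run on F2 is billed as 60 seconds
print(f"{run_cost_eur(2, 7200):.3f} EUR")  # two hours on F2
```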

From this pay-as-you-go (paygo) model, you get a lot of flexibility. Do you need a capacity only for specific development purposes? Create one, pause it, and only resume it when you need it. No need for a costly monthly commitment: you only pay for the hours you use.

Another nice option is to bump up the capacity on busy days. Let’s say the first Monday of the month is super busy; then make sure to scale up the capacity to prevent outages. But you won’t have to pay for full months at this higher capacity, just the hours or days you use it.

On the other hand, this flexibility comes at a cost: the paygo model isn’t exactly cheap. For Microsoft, it helps if you lock in your usage instead of using a fully flexible model that you scale up and down whenever you need to.

Committing to 12 months for a discounted rate

For that reason, Microsoft created Reserved Instances for the Fabric capacities. The billing for Reserved Instances goes through the Azure Reservations portal. They are purely a billing concept, not a different type of capacity. The Fabric F64 paygo is the same capacity as an F64 reserved instance.

So, how does that work?

In the Azure Reservations portal, you buy a yearly reservation for one or more Capacity Units at the same time. You receive a discount of approximately 40% on the paygo price for those units, but you must commit to a full year. You can choose to prepay the year or pay monthly, but in either case you have a yearly commitment.

Let’s say you buy a reservation for 16 Capacity Units. You pay for it upfront, and then you deploy a Microsoft Fabric F16 capacity. Your prepaid 16 Capacity Units automatically cover the first 16 units of your capacity, reducing your bill. That means you will not pay any paygo charges for that F16 capacity.

Example of billing with reservations

Now if you were to bump the capacity to an F64 for exactly one hour, the billing will work as follows.

Next month, Microsoft Fabric will calculate your usage by multiplying the hours by the number of Capacity Units used during those hours: for one hour that will be 64, for the others it will be 16. The reserved 16 Capacity Units are subtracted from the units used in each hour, so you will receive a paygo bill for 1 hour times 48 Capacity Units (because you already prepaid 16 of the 64 units consumed in that hour).
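The sketch below reproduces this example in Python. It assumes the reservation is applied hour by hour (the reserved units cover up to that many Capacity Units in each hour, and anything above is billed at paygo), a 730-hour month, and the example paygo rate from earlier. The function name `paygo_cu_hours` is hypothetical.

```python
PRICE_EUR_PER_CU_HOUR = 0.2115  # example paygo list price from the text


def paygo_cu_hours(hourly_cus: list[int], reserved_cus: int) -> int:
    """CU-hours not covered by the reservation, assuming it is applied hour by hour."""
    return sum(max(0, cus - reserved_cus) for cus in hourly_cus)


# One hour at F64, every other hour of an (assumed) 730-hour month at F16.
usage = [64] + [16] * 729
extra = paygo_cu_hours(usage, reserved_cus=16)
print(f"{extra} CU-hours billed at paygo = {extra * PRICE_EUR_PER_CU_HOUR:.2f} EUR")
```

The reservation itself is billed separately; this only shows the paygo top-up.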

If you size the environment properly, this will result in a 30-40% discount on your Microsoft Fabric costs. Because, let’s be honest, a data platform has a lifetime of more than 1 year, so committing to at least a year of spending is not a bad idea.
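The discount also tells you when a reservation beats a paygo capacity that you pause when idle. Here is a back-of-the-envelope sketch, assuming a flat 40% discount (the approximate figure above; your actual discount may differ): a reservation costs about 60% of running paygo 24/7, so it only wins if the paygo capacity would be running more than roughly 60% of the hours. The function name is hypothetical.

```python
RESERVATION_DISCOUNT = 0.40  # assumption: approximate discount from the text


def reservation_is_cheaper(running_fraction: float, discount: float = RESERVATION_DISCOUNT) -> bool:
    """Compare a reservation (paid for every hour) with paygo that is paused when idle.

    running_fraction: share of the hours the paygo capacity would actually run (0..1).
    """
    reserved_cost = 1.0 - discount  # relative to running paygo 24/7
    paygo_cost = running_fraction   # relative to running paygo 24/7
    return reserved_cost < paygo_cost


print(reservation_is_cheaper(0.3))  # False: a dev capacity running ~30% of the time
print(reservation_is_cheaper(0.9))  # True: a production capacity that is almost always on
```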

Your Microsoft partner (that could be me!) can also help you set this up and handle billing for you. Send me a message if you want to have a quick chat about the services my company offers.

Bursting and Smoothing Explained

Fabric compute works a bit differently than you might be used to from on-premises or traditional cloud (PaaS) offerings.

In the past, you would buy a server with a fixed number of CPU cores, amount of memory, and so on. If your queries were heavy and maxed out the available resources, they would take longer to execute than if you had bought more hardware.

The same goes for PaaS (platform as a service) cloud offerings like Azure SQL: you buy vCores or DTUs, and that’s it. A heavy query might max out a 100 DTU database and run twice as fast on a 200 DTU database.

With Fabric, that works a little differently. When you purchase a capacity, you are effectively getting a pool of Capacity Unit seconds. I wrote about this in the section on the basics of Microsoft Fabric costs above.

And yes, larger capacities do give you more parallel Spark sessions, so they could potentially offer better performance, but that’s not always the case. This is because of bursting and smoothing.

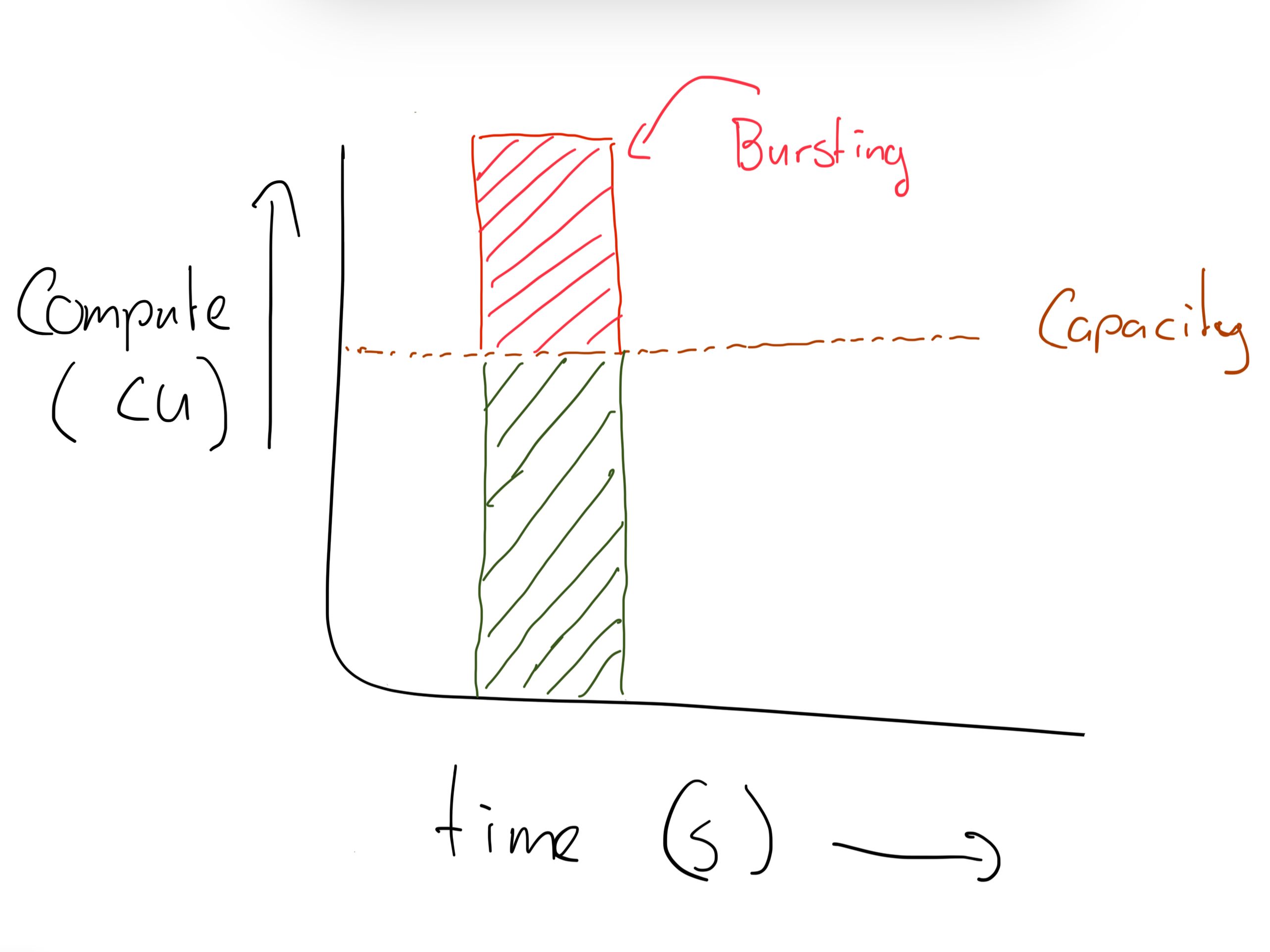

Bursting

Bursting in Microsoft Fabric means temporarily using more compute than your capacity provides. Let’s assume you’ve purchased a Fabric F2 capacity. That means you have 2 Capacity Units available.

If you run a very heavy process in a dataflow, pipeline, or notebook, your environment may ‘burst’ its compute resources above the 2 Capacity Units you have available.

This ensures that operations complete in a timely manner. However, it also introduces a risk of overconsumption on your capacity.

After all, when your process takes 20 Capacity Units, you might think there is nothing left for other processes running on your F2 capacity.

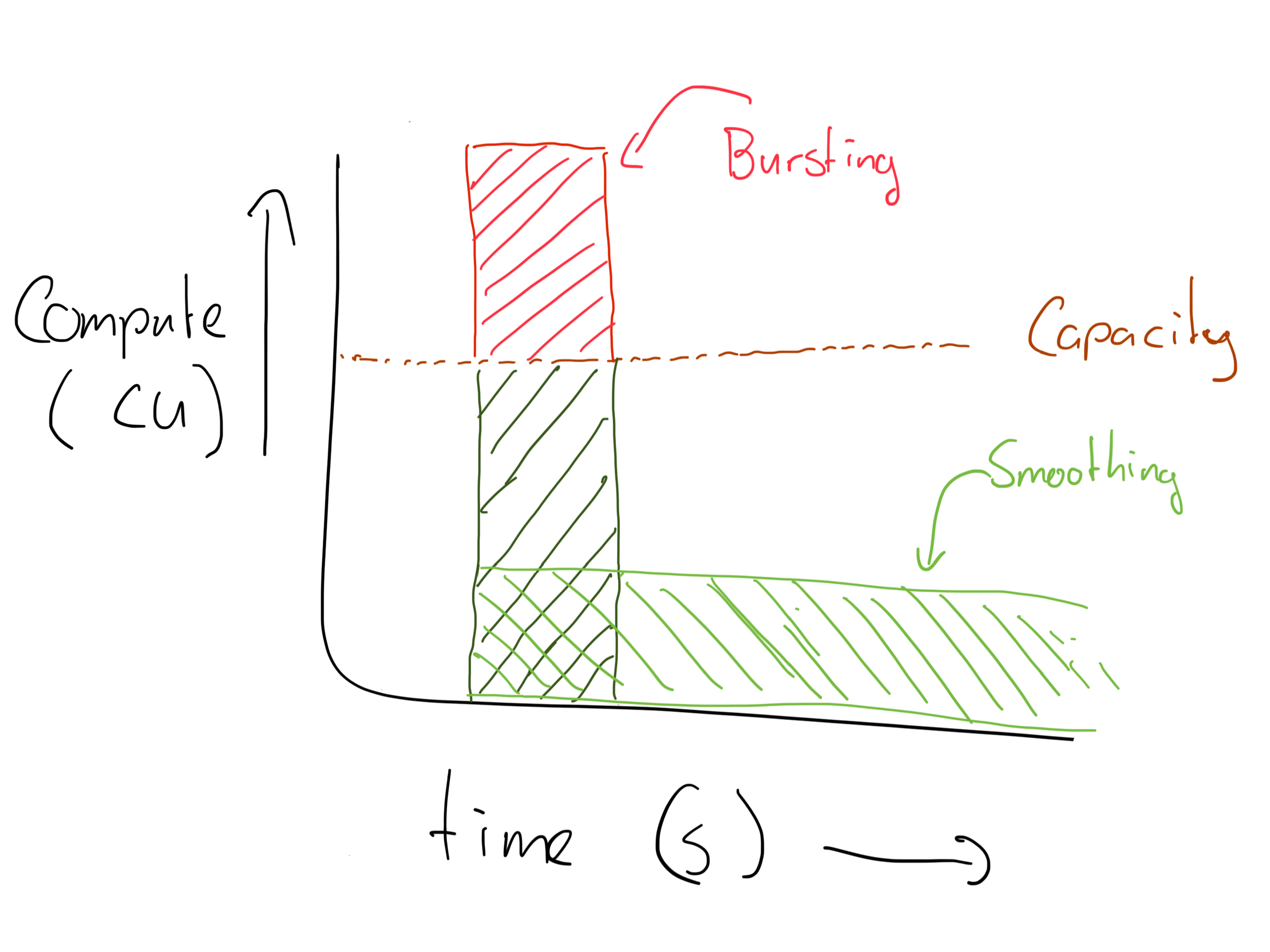

This is where smoothing comes into play.

Smoothing

Smoothing means that consumption is spread out over time. For background operations, an operation’s compute consumption is smoothed out over the next 24 hours. This ensures that you still pay for your burst performance.

Please note that the 24-hour smoothing applies to background operations, such as scheduled pipelines and notebooks. Other operations, such as rendering a Power BI report page in the Service, are considered ‘interactive’, and for those a 10-minute smoothing is applied. Please refer to the official Microsoft documentation on background and interactive operations.

So yes, your process might consume 20 Capacity Units even on an F2 capacity and run 10 times faster, but you won’t get this for free from Microsoft. You will pay for it.

What happens: let’s say your process ran for 5 minutes at 20 Capacity Units of power. That means you consumed 5*60*20 = 6,000 Capacity Unit seconds (CU(s)).

These are smoothed out over the next 24 hours. That means that, of the 172,800 CU(s) allowance you get per day with your F2, you’ve already used 6,000 CU(s).
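To make the smoothing arithmetic concrete, here is a simplified sketch that spreads the burst evenly over the 24-hour window. The real Capacity Metrics accounting is more detailed than this, and the function names are hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60
SMOOTHING_WINDOW_SECONDS = 24 * 60 * 60  # background operations are smoothed over 24 hours


def burst_cus(capacity_units: float, minutes: float) -> float:
    """CU(s) consumed by a burst of `capacity_units` lasting `minutes`."""
    return capacity_units * minutes * 60


def smoothed_rate(cus: float, window_seconds: int = SMOOTHING_WINDOW_SECONDS) -> float:
    """Average CUs per second once the consumption is spread over the smoothing window."""
    return cus / window_seconds


capacity_units = 2  # F2
allowance = capacity_units * SECONDS_PER_DAY
used = burst_cus(20, 5)

# The smoothed rate stays well below the 2 CUs per second the F2 provides.
print(f"Burst: {used:,.0f} CU(s), smoothed to {smoothed_rate(used):.3f} CU per second")
print(f"Share of the daily F2 allowance: {used / allowance:.1%}")
print(f"Remaining: {allowance - used:,.0f} CU(s)")
```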

This smoothing of operations makes sure you can size your Fabric environment on average load, instead of on peak load as you would have in the past. That is amazing!

If your usage exceeds your allowance after smoothing, Fabric might throttle your capacity. In the worst-case scenario, that could mean your capacity becomes unavailable for report consumers, Spark jobs, etc. Keep this in mind and please, monitor!

Pausing the capacity

Now, when your capacity is overused and throttled to the point that everything is offline, there is a trick to unlock it again.

To unlock your capacity, go to the Azure portal, pause your Fabric capacity, and resume it. Then wait for a bit and all your overusage will magically be gone. It will also magically appear on your next invoice, but that’s only fair. At least you can use the capacity again!
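If you prefer to script this instead of clicking through the portal, the sketch below builds the Azure Resource Manager calls. It assumes the `suspend` and `resume` actions on the `Microsoft.Fabric/capacities` resource type with `api-version=2023-11-01`, and that you already have a valid Azure AD bearer token; verify both against the current ARM reference before relying on it. The subscription, resource group, and capacity names are placeholders, and the helper functions are hypothetical.

```python
import urllib.request

ARM = "https://management.azure.com"
API_VERSION = "2023-11-01"  # assumption: check the current Microsoft.Fabric API version


def capacity_url(subscription_id: str, resource_group: str, capacity: str, action: str = "") -> str:
    """ARM URL for a Fabric capacity, optionally for an action such as 'suspend' or 'resume'."""
    path = (f"{ARM}/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
            f"/providers/Microsoft.Fabric/capacities/{capacity}")
    if action:
        path += f"/{action}"
    return f"{path}?api-version={API_VERSION}"


def capacity_action_request(url: str, token: str) -> urllib.request.Request:
    """Build (but do not send) an authenticated POST request for a capacity action."""
    return urllib.request.Request(url, method="POST", headers={"Authorization": f"Bearer {token}"})


# Placeholders: replace with your own subscription, resource group, and capacity name.
sub, rg, cap = "00000000-0000-0000-0000-000000000000", "my-rg", "myfabriccapacity"
suspend = capacity_action_request(capacity_url(sub, rg, cap, "suspend"), token="<token>")
resume = capacity_action_request(capacity_url(sub, rg, cap, "resume"), token="<token>")
# To actually run them: urllib.request.urlopen(suspend), wait, then urllib.request.urlopen(resume)
```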

Microsoft Fabric Costs Management and Monitoring

Using the Fabric Capacity Metrics app

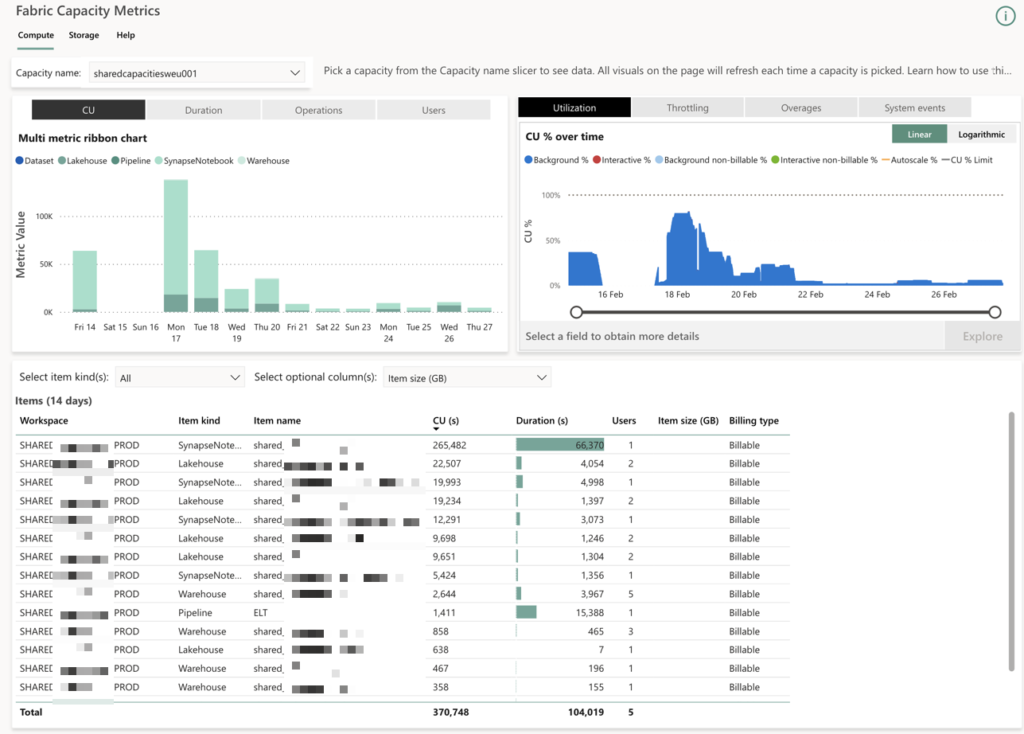

Monitoring your cloud spend can be tricky. Luckily, with Fabric, Microsoft have built a report that gives you insight into the CU(s) usage of your environments.

Let’s look at this report:

The image above shows a screenshot of the Capacity Metrics App, which gives insight into the CU(s) consumption of a Fabric capacity. In this case, a small F2 capacity is used to extract data daily from a SaaS application using its REST APIs.

The data is extracted incrementally using notebooks, and then processed into a silver (time travel) and a gold (dimensional model) lakehouse, following the medallion architecture.

Because we’re using notebooks and no Dataflows or Data Pipelines, the overall setup is quite cheap in terms of CU(s) usage. As you can see, even on this very small F2 capacity, we are well under the maximum allowance. On Monday the 17th we ran a full load that took about 140k CU(s), but on incremental days we rarely go above 5k CU(s).

When you start running over your capacity, the report lets you check when and where you went over capacity and when the burndown will be complete.

Understanding billing reports and usage patterns

Understanding the billing of your Microsoft Fabric costs is key to optimising your spend. What you need to understand is which processes use up your CU(s) allowance, and how these patterns evolve over time.

The Fabric Capacity Metrics app provides a detailed breakdown of usage, helping you identify these patterns and potential optimisations.

A key aspect to monitor is how your workload spreads throughout the month. Busy moments within a day are smoothed out, but it is more difficult to plan for a mix of busy and quiet days, because smoothing does not stretch beyond 24 hours.

One of the most useful insights from the available reports is burndown tracking. This tells you when an overused capacity will be available again. Preventing overuse is best, but when it happens you need these insights to decide how to proceed.
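For a rough feel of how burndown works, here is a deliberately simplified sketch: it assumes the carried-forward overage is paid back only by the idle part of the capacity, at a constant rate. Fabric’s real accounting (timepoints, new work being smoothed in at the same time) is more involved, so treat the Capacity Metrics app as the source of truth. The function name and numbers are hypothetical.

```python
def burndown_seconds(overage_cus: float, capacity_units: float, current_usage_cu: float) -> float:
    """Rough time to burn down carried-forward overage.

    Simplified model: each second, the idle part of the capacity
    (capacity_units - current_usage_cu) pays back that many CU(s).
    """
    idle = capacity_units - current_usage_cu
    if idle <= 0:
        return float("inf")  # nothing left over to pay back the overage
    return overage_cus / idle


# Hypothetical example: 7,200 CU(s) of overage on an F2.
print(burndown_seconds(7200, 2, 0) / 60, "minutes with no other load")
print(burndown_seconds(7200, 2, 1) / 60, "minutes while 1 CU is still in use")
```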

By keeping an eye on your usage patterns, and optimising workloads, you can ensure predictable performance while controlling costs in Microsoft Fabric.

Microsoft Fabric Cost Optimisation Strategies

Choosing the appropriate tools

Choosing the right tools for each workload is key to optimising your Microsoft Fabric costs. You get plenty of tools with Fabric, and it’s easy to pick suboptimal ones.

For example, you could copy data from one lakehouse table to another lakehouse table using Dataflows, using Data Pipeline copy activities, or using PySpark in a notebook. The three options all have different impacts on your CU usage.

I find it difficult to make definitive statements, but in general, the more low-code you use, the more CU(s) you consume. The opposite is also true: the more pro-code (i.e. notebooks) you use, the fewer CU(s) you will typically consume.

If you have the technical skills, I would recommend using PySpark notebooks for everything you do, unless that is not possible. One example where I still use Data Pipeline copy activities is ingesting data from on-premises databases. Notebooks cannot read data from an on-prem database, so I accept the higher compute usage for these tasks.

Implementing scaling effectively

You can implement auto-scaling for most Azure resources quite easily. However, for Microsoft Fabric, I have not seen this option yet. That doesn’t mean you can’t scale your capacity up or down, though.

If your workload is predictable and the same day after day, month after month, you could simply pick the correct SKU and buy a reservation to lock in the roughly 40% discount.

However, if your load is higher on some days (for example, first Monday of the month), you might want to scale up your capacity accordingly.

Even though auto-scaling is not possible yet, you can programmatically scale your capacity using the Azure Resource Manager (ARM) APIs. You can trigger these calls from a pipeline, a notebook, etc. How this works exactly is a topic for another article; this one is long enough as it is.
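Without going into the full setup, here is a minimal sketch of the idea. It assumes a hypothetical schedule (F64 on the first Monday of the month, F16 otherwise) and the ARM update operation on `Microsoft.Fabric/capacities` that accepts a PATCH body of the form `{"sku": {"name": ..., "tier": "Fabric"}}` with `api-version=2023-11-01`; check the current ARM reference before using it. It only builds the request; names and IDs are placeholders.

```python
import datetime
import json
import urllib.request

API_VERSION = "2023-11-01"  # assumption: check the current Microsoft.Fabric API version


def target_sku(day: datetime.date, busy_sku: str = "F64", normal_sku: str = "F16") -> str:
    """Hypothetical schedule: scale up on the first Monday of the month."""
    is_first_monday = day.weekday() == 0 and day.day <= 7
    return busy_sku if is_first_monday else normal_sku


def scale_request(subscription_id: str, resource_group: str, capacity: str,
                  sku: str, token: str) -> urllib.request.Request:
    """Build (but do not send) an ARM PATCH request that changes the capacity SKU."""
    url = (f"https://management.azure.com/subscriptions/{subscription_id}"
           f"/resourceGroups/{resource_group}/providers/Microsoft.Fabric/capacities/{capacity}"
           f"?api-version={API_VERSION}")
    body = json.dumps({"sku": {"name": sku, "tier": "Fabric"}}).encode()
    return urllib.request.Request(url, data=body, method="PATCH", headers={
        "Authorization": f"Bearer {token}", "Content-Type": "application/json"})


today = datetime.date(2025, 3, 3)  # a first Monday of the month
req = scale_request("00000000-0000-0000-0000-000000000000", "my-rg", "myfabriccapacity",
                    target_sku(today), token="<token>")
# Send with urllib.request.urlopen(req) from a scheduled notebook or pipeline.
```

Remember to schedule the matching scale-down as well, otherwise you keep paying for the larger SKU.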

Best practices for reducing unnecessary CU consumption

Besides choosing the correct tools and SKU, there are general patterns to optimise for CU consumption.

In general, the best practice is to NOT DO THINGS YOU DON’T NEED TO DO. I see so many developers and engineers run code, copy data, etc. just because it’s convenient. If you don’t need to copy data, don’t copy the data.

If you can ingest data incrementally, don’t ingest full loads.

Repeat after me: I will not perform full loads when I don’t have to.

Create incremental patterns, not only for ingesting data into your bronze layer, but also for merging that bronze data to silver, and even to gold. Sometimes, you cannot load data to gold incrementally, but often there are options.
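The core of most incremental patterns is a watermark: remember the latest change you processed and only pick up rows modified after it. In a PySpark notebook you would typically express the same filter on a DataFrame and persist the watermark in a control table; the plain-Python sketch below only shows the idea, with a hypothetical `modified_at` column and hypothetical names.

```python
from datetime import datetime


def incremental_batch(rows: list[dict], watermark: datetime) -> tuple[list[dict], datetime]:
    """Return only rows modified after the watermark, plus the new watermark.

    Assumes each row carries a hypothetical 'modified_at' timestamp column.
    """
    new_rows = [r for r in rows if r["modified_at"] > watermark]
    new_watermark = max((r["modified_at"] for r in new_rows), default=watermark)
    return new_rows, new_watermark


source = [
    {"id": 1, "modified_at": datetime(2025, 3, 1, 8, 0)},
    {"id": 2, "modified_at": datetime(2025, 3, 2, 9, 30)},
    {"id": 3, "modified_at": datetime(2025, 3, 3, 7, 15)},
]
last_run = datetime(2025, 3, 2, 0, 0)  # watermark stored after the previous load

batch, last_run = incremental_batch(source, last_run)
print([r["id"] for r in batch])  # [2, 3]: only these need to be processed
```

Running it again with no new changes returns an empty batch, which is exactly the CU(s) you save compared with a full load.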

It pays to think about these patterns a bit longer in order to save on CU(s). Yes, labour time is very expensive, but it is a one-time investment. Infrastructure, on the other hand, is an ongoing cost. I am a fan of Microsoft, but let’s not make them any richer than they need to be 🙂

Conclusion

To wrap things up, managing Microsoft Fabric costs comes down to understanding how Capacity Units (CUs) work and making smart decisions about consumption. Whether you stick with pay-as-you-go for flexibility or commit to a reserved instance for savings, the key is to align your capacity with actual workload demands. Keeping an eye on usage through the Fabric Capacity Metrics app helps you stay in control and avoid surprises. With the right approach, you can get the most out of Fabric without overspending.

Are you currently planning a Microsoft Fabric implementation? What cost considerations are top of mind for your organisation? Share your thoughts and questions in the comments below, and let’s discuss strategies for optimising your Fabric investment.